Applied Machine Learning in Python and R, STP 494, Spring 2023

Course Information

Project is due 5/5/2023:

Just email me a PDF of the project. Make sure all group member names are clearly indicated!

Tu Th 1:30 PM - 2:45 PM 1/9/23 - 4/28/23 Tempe DH 218

Instructor/TA:

Instructor: Robert McCulloch, robert.mcculloch@asu.edu

TA: Andrew Herren, asherren@asu.edu

TA office hours:

Hi Rob,

Just wanted to let you know that my office hours are Tuesday 3:00-4:30 and Thursday 2:00-3:30 in WXLR 303.

Feel free to let the students know that they can come by and ask me questions then (when they're not in one of your classes).

I am also available to the students by email, so they can also feel free to email me with questions.

Best,

Drew

How-to-use-Canvas-Discussions.pdf

Where we are and what I should be doing?

where and what

Miscellaneous

The Ultimate Scikit-Learn Machine Learning Cheatsheet

Scikit-Learn Cheat Sheet (2021)

Syllabus

Syllabus

Some useful books: books

Homework

How_to_Submit_Homework_in_Canvas.pdf

Homework 1

Let's say homework 1 is due February 6.

Homework 1 in Python

hw1-P.ipynb

Homework 1 in R

hw1-R.Rmd

python solutions

R solutions

simple ggplot with 4 variables

Homework 2

Homework 2

Suggested due date: February 17.

Homework 3

Homework 3, optimization

Suggested due date: After spring break.

Homework 4

Homework 4, Polynomial regression and the LASSO

Suggested due date: After spring break.

Homework 5

Homework 5

Due 5/5/2023.

R solutions to homework 5, R script

R solutions to homework 5, html

Python solutions to homework 5, Python script: just a simple tree where y is price and x is mileage

Notes

Readings:

Chapter 2 of either ISLR (Introduction to Statistical Learning)

and/or Chapter 2 of ESL (Elements of Statistical Learning)

would be helpful for the first two sections of notes.

But just kind of skim, you don't need to understand everything in these

chapters at this point.

Note: I will sometimes give you the R code I used to make the notes.

In the R code, I use the following files of simple R functions:

robfuns.R

rob-utility-funs.R

mlfuns.R

lift-loss.R (simple lift functions and deviance loss)

So, I might have the line source("../../robfuns.R") near the top of a script.

Simply replace the ../../ with the correct path to where you have put the file.

You may also see source("notes-funs.R").

This is just to write stuff out in a way I can easily pop into a latex script.

It just has one function printfl which you can replace with a simple R print.

For completeness, here is the file:

notes-funs.R

Note that often my scripts are designed so that if you set dpl=FALSE at the top,

then you can just source the script and the whole thing will run.

If dpl=FALSE then printfl is just print.

This setup may look weird, but the scripts are actually designed to run in batch mode.

Probability Review and Naive Bayes

Naive Bayes in R:

Simple Illustration of Naive Bayes on the sms data (pdf)

Simple Illustration of Naive Bayes on the sms data (Rmd)

This is an ASCII R script where I play around with the Naive Bayes text analysis in more detail:

naive-bayes_notes.R

Naive Bayes in Python:

NB_in_python.html

NB_in_python.ipynb

KNN and the Bias Variance Tradeoff

KNN and the Bias Variance Tradeoff

Simple version of R code in notes

Python code to replicate what is in the notes for the Boston example using sklearn

R script to illustrate the bias-variance tradeoff

Simple R code to do cross validation: docv.R

Simple R code to get fold id's for cross validation

R users might want to check out the caret package

Note:

Both ESL and ISLR have an introductory overview in Chapter 2 in which general ideas are discussed,

then do regression and some other basic models, and then later

discuss the practical (e.g. cross validation) and theoretical (e.g. MLE) ideas

(ISLR Chapter 5, ESL Chapters 7 and 8).

I would encourage you to ``skip ahead'' and read/skim the discussion of cross-validation (and other topics).
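If you want a concrete picture of cross-validation before reading those chapters, here is a minimal Python sketch of the basic bookkeeping: assign each observation a fold id, then loop over folds using one fold as the test set and the rest as the training set. This is not the course's docv.R; the function names make_fold_ids and cv_splits are made up for illustration.

```python
import random

def make_fold_ids(n, n_folds, seed=0):
    """Return a list of n fold labels in 0..n_folds-1, balanced and randomly ordered.

    Hypothetical helper for illustration only.
    """
    ids = [i % n_folds for i in range(n)]   # balanced labels 0,1,...,n_folds-1,0,1,...
    rng = random.Random(seed)               # seeded so the split is reproducible
    rng.shuffle(ids)
    return ids

def cv_splits(fold_ids):
    """Yield (train_indices, test_indices), one pair per fold."""
    n_folds = max(fold_ids) + 1
    for k in range(n_folds):
        test = [i for i, f in enumerate(fold_ids) if f == k]
        train = [i for i, f in enumerate(fold_ids) if f != k]
        yield train, test

fold_ids = make_fold_ids(n=10, n_folds=5)
for train, test in cv_splits(fold_ids):
    # In a real analysis you would fit on `train` and compute the loss on `test` here.
    print("test:", test, "train:", train)
```

Each observation lands in exactly one test fold, so averaging the test losses over folds gives an out-of-sample estimate that uses all of the data.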

the curse of dimensionality

More Probability, Continuous Random Variables

More Probability, Continuous Random Variables

MLE and Optimization

MLE and a little optimization

Recorded lecture, sections 1-3

Recorded lecture, section 4, Projecting onto a vector

Recorded lecture, section 5, Finding a minimum, several variables

Recorded lecture, section 6, Maximum Likelihood, the normal

Recorded lecture, sections 7-10, Multinoulli and constrained optimization

Regularized Linear Regression:

Linear Models and Regularization

The 1se rule

Note the excellent Resources from ISLR:

Check out the webpage: https://www.statlearning.com/

In particular, Sections 6.1 and 6.2 cover subset selection and ridge and LASSO.

Here is the link to the ISLR lecture notes: ISLR lecture notes.

Here is the link to ISLR lab for R: chapter 6, R lab

Here is the link to ISLR lab for R, html: Chapter 6, R, html

Recorded lecture, sections 1 and 2, Introduction, Matrix notation, and least squares

Recorded lecture, section 3, maximum likelihood

Recorded lecture, sections 4 and 5, subset selection and AIC/BIC

Recorded lecture, section 6, Shrinkage-L2, Ridge Regression

Simple python script to do Ridge and Lasso

Simple python script to do Ridge and Lasso, html

Simple R script to do Ridge and Lasso

Simple R script to do Ridge and Lasso, html version

Properties of Linear Regression

Properties of Linear Regression (.Rmd)

R script to illustrate all subsets regression in package leaps

R script for ridge and lasso using glmnet, Hitters Data.

R script for reading in diabetes data and looking at y.

R script for Lasso on Diabetes.

R script for comparing Lasso, Ridge, Enet.

R script for forwards stepwise on Diabetes.

do-stepcv.R: R functions for doing stepwise.

R script to learn about formulas and model.matrix

(see Chapter 11, Statistical models in R, in the R-introduction Manual)

Note: AIC and BIC can be confusing. You can see different versions of the formulas.

Since you pick the smallest one, versions that differ by a constant are all correct.
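Here is a small Python sketch of why a constant shift does not matter. The log-likelihood values and the sample size are made up for illustration, and the shift used is just one example of a term that depends only on n and so is the same for every model fit to the same data.

```python
import math

def aic(loglik, k):
    """One common version: AIC = -2 * (maximized log-likelihood) + 2 * (number of parameters)."""
    return -2.0 * loglik + 2.0 * k

# Hypothetical (made-up) maximized log-likelihoods and parameter counts for three models.
models = {"small": (-120.0, 2), "medium": (-112.5, 4), "large": (-111.9, 7)}

n = 50                                   # made-up sample size
const = n * math.log(2 * math.pi) + n    # depends only on n, so identical across models

full = {name: aic(ll, k) for name, (ll, k) in models.items()}
shifted = {name: value - const for name, value in full.items()}

best_full = min(full, key=full.get)
best_shifted = min(shifted, key=shifted.get)
print(best_full, best_shifted)           # the same model wins under both versions
```

The numbers reported by the two versions differ, but the ranking of the models, which is all you use, does not.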

This discusses things correctly and tells you how it works in R:

Cp, AIC, BIC or

the web link.

This link shows how confused the AIC vs. BIC discussion is:

AIC vs. BIC

R script for seeing Ridge vs Lasso in simple Problem.

R script for plotting Ridge and Lasso shrinkage (thresholding function).

R script to see Lasso coefs plotted against lambda.

R script to see Ridge coef plotted against lambda.

Logistic Regression

Regularized Logistic Regression

Simple script to illustrate regularized logit in R

Simple script to illustrate regularized logit in python

R script for Regularized logit fit to simulated data.

R script Lasso fit to w8there data.

R script Ridge fit to w8there data.

Classification Metrics

Classification Metrics

fglass.R: script using the forensic glass data

tab.R: script using the tabloid data

Trees

Note the excellent Resources from ISLR:

Check out the webpage: https://www.statlearning.com/

In particular, Chapter 8 covers tree based methods including random forests and boosting.

Here is the link to the ISLR lecture notes: ISLR lecture notes.

Here is the link to ISLR lab for R: chapter 8, R lab

Here is the link to ISLR lab for R, html: Chapter 8, R, html

simple tree in R

simple tree in python

Random Forests and Gradient Boosting on the California Housing Data in python

Classification with Logit, Trees, Random Forests, and Gradient Boosting in sklearn

xgboost in python

tree-bagging.R

knn-bagging.R

boost-demo.R

R package for plotting rpart trees

Neural Nets

Lectures:

Recorded Lecture: Introduction to Recurrent Neural Networks

Recorded Lecture: Basic Model for Recurrent Neural Networks

Recorded Lecture: IMDB example with Recurrent Neural Networks

Recorded Lecture: Market Volume example with Recurrent Neural Networks

Deep Learning review by LeCun, Bengio, and Hinton in Nature

Note the excellent Resources from ISLR:

Check out the webpage: https://www.statlearning.com/

In particular, Chapter 10 covers Neural Nets.

Here is the link to the ISLR lecture notes: ISLR lecture notes.

Here is the link to ISLR lab for R keras: chapter 10, R lab, keras

Here is the link to ISLR lab for R torch: chapter 10, R lab, torch

Here is the link to ISLR lab for R keras, html: Chapter 10, R keras, html

Here is the link to ISLR lab for R, torch html: Chapter 10, R torch, html

Software issues are more involved for Neural nets compared to most other Machine Learning topics because of

the computational issues involved in fitting deep networks.

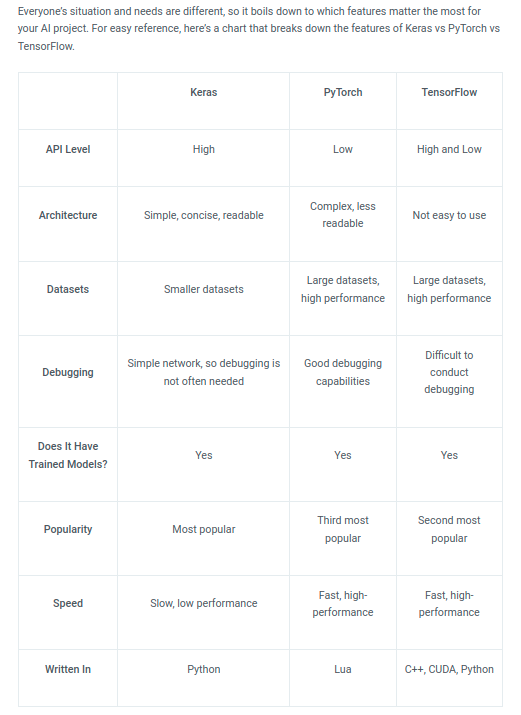

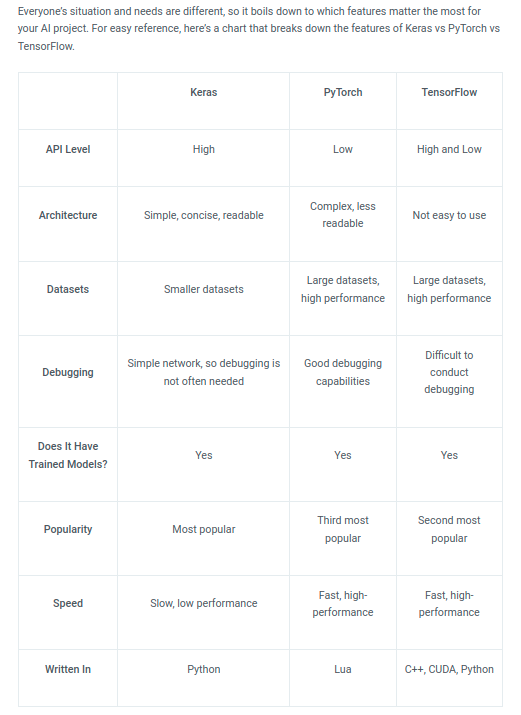

The software packages you most commonly hear about are tensorflow, keras, and torch.

keras is actually an Application Programming Interface (API) written in Python which provides a relatively simple way to work with neural nets.

tensorflow is the heavy hitter, but it is harder to use.

Keras was adopted and integrated into TensorFlow in mid-2017. Users can access it via the tf.keras module.

However, the Keras library can still operate separately and independently.

torch (pytorch in python) is more programmable and powerful than keras but harder to use.

There are versions of keras and torch in both python and R.

I stole this from simplilearn.com.

R users:

The website for the ISLR book https://www.statlearning.com has excellent resources for doing neural nets in R.

The lab for "Chapter 10. Deep Learning" in the book is written in keras, but at the webpage they have both keras and torch versions.

If you click on /Resources/Second Edition at the ISLR website you will get to

https://www.statlearning.com/resources-second-edition.

Then click on "A Note About the Chapter 10 Lab" and you will get to links and resources for installing neural net software in R.

The ISLR webpage makes it sound like keras is harder to install because it uses reticulate to call python but

torch is much slower.

See also https://keras.rstudio.com/ for keras.

See R torch package.

In particular notice the vignette links

(e.g. installation, torch installation).

To get torch to install in R, I had to use "install_torch(timeout = 600)" as discussed in the installation vignette.

Another useful link for torch in R is Torch for R

See also https://tensorflow.rstudio.com/install/ which claims to tell you how to install tensorflow for R.

Note also that there are the R packages neuralnet and nnet, installed with install.packages("neuralnet")

and install.packages("nnet").

Nice tutorial on neural nets in R and the neuralnet R package

The nnet package is easy to use and has been around a long time (good thing!) but only does a single hidden layer.
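To make "a single hidden layer" concrete, here is a minimal Python sketch of the forward pass of such a network, using XOR as the example. The weights are picked by hand for illustration rather than fit to data, and this does not use or mimic the nnet API; the function name single_layer_net is made up.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def single_layer_net(x, W, b, v, c):
    """One hidden layer: hidden = sigmoid(W x + b), output = sigmoid(v . hidden + c)."""
    hidden = [
        sigmoid(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
        for row, b_i in zip(W, b)
    ]
    return sigmoid(sum(v_i * h_i for v_i, h_i in zip(v, hidden)) + c)

# Hand-picked weights (an assumption for illustration, not fitted):
# hidden unit 1 acts like OR, hidden unit 2 like NAND, and the output like AND of the two.
W = [[20.0, 20.0], [-20.0, -20.0]]
b = [-10.0, 30.0]
v = [20.0, 20.0]
c = -30.0

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(single_layer_net(x, W, b, v, c), 3))
```

XOR cannot be represented by a logistic regression on x alone, but two hidden units are enough, which is why it is a standard first example for single layer nets.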

Python users:

You should check out Google Colab, it is pretty awesome.

google colab

Works just like a jupyter notebook.

All set up to run keras, pytorch, or whatever.

Check out /Runtime/Change runtime type/Hardware accelerator.

Examples in R with keras

simple example of R package magrittr used in keras to pipe

First example in ISLR lab with Hitters data

keras_simple-Boston-lstat.R

Simple Boston Housing example, single layer, L2, keras, in R

See "Deep Learning with Python" by Chollet, or "Deep Learning with R" by Chollet and Allaire.

Examples in R with torch

First example in ISLR lab with Hitters data

Examples in Python with keras

First example in ISLR lab with Hitters data, python version

Simple Boston Housing example, single layer, L2, keras, in python

In python, Movie Reviews example in keras with 2 layers

In python, simple example of mnist data using keras

In python, IMDB example from section 3.4 of Chollet book modified to create some of the stuff in the notes.

Examples using R nnet package

Simple example of using nnet with Boston data (y=medv, x=lstat) and Default data.

Notes on a single layer using R package nnet

Single Layer Neural Nets (R code)

Single Layer Neural Nets XOR (R code)

plot.nnet.R

pytorch example with susedcars data

pytorch_susedcars.py

To learn pytorch you should work through the tutorials at the pytorch website: pytorch tutorials

Then the book "Deep Learning with PyTorch" by Stevens, Antiga, and Viehmann is good.

I also found the intro chapters of "Modern Computer Vision with PyTorch" by Ayyadevara and Reddy helpful.

Some nice websites

Good discussion of Back-prop

Nice website with an overall discussion and pictures of the uncovered features

Nice visualization of a neural network

Nice tutorial on NN in R and the neuralnet R package

R-bloggers on classifying digits with keras in R

Rob's notes, Back propagation

Backpropagation

Keras Cheat Sheet

Clustering: Hierarchical and K-means

Lectures:

Undirected Learning

Cereal Data

Distance

kmeans

Dimension Reduction: Principal Components and the Autoencoder

Lectures:

Introduction

Principal Components

Autoencoder

Introduction to Bayesian Statistics:

Introduction to Bayesian Inference and Monte Carlo

Introduction to Bayesian Statistics and the Beta/Bernoulli Inference

Introduction to Bayesian Regression

Bayesian Regression and Ridge Regression

Bayesian Additive Regression Trees

Introduction to BART

Old BART talk

Latent Dirichlet Allocation

R

Information on R

Python

Information on Python

Sample Projects

Project is due 5/5/2023:

Just email me a PDF of the project. Make sure all group member names are clearly indicated!

A nice project option is the drug discovery data I used in ``BART: Bayesian Additive Regression Trees''.

The data is on Rob's data page: rob's data page.

Here is the BART paper where we used the Drug Discovery data, see section 5.3:

BART paper

Some old projects:

Binary Classification for Heart Disease

HEART DISEASE PREDICTION

Predicting Soybean Yield (pdf)

Predicting Soybean Yield (Rmd)

Drug Discovery Data

Credit Card Fraud

Autoencoder on the MNIST Data

Cancer Classification using Microarray Data

Note that the data for this project is on Rob's data webpage, search for Janss.

In general, a project could have sections:

- Introduction: what is the goal of the study (e.g. what is x and y and why do I care)

- Data: description of data and data processing (what are n and p? categorical x/y?)

- Plan of attack: outline of what you will do (what methods will you try?)

- Results: tables and graphs showing what you found, with discussion

- Conclusion: brief summary of what you found

Data

Rob's Data Web Page

Sources for example data sets:

This has many data sets collected from different R packages but smallish n and p:

R data sets

Note: this is copied from page 34 of

``Hands-On Machine Learning with Scikit-Learn and TensorFlow'' by Geron.

UC Irvine Machine Learning Repository

Kaggle Data Sets

Amazon's AWS datasets

Meta Portals

dataportals.org

open data monitor