Computational Statistics, STP 540, Spring 2023

Basic Course Information

Final Project is due 5/5/2023:

Just email me pdf of the project. Make sure all group member names are clearly indicated!!

Class time and place

Tu Th 12:00 PM - 1:15 PM 1/9/23 - 4/28/23 Tempe WXLR A304

Instructor: Robert McCulloch, robert.mcculloch@asu.edu

TA: Andrew Herren, asherren@asu.edu

Hi Rob,

Just wanted to let you know that my office hours are Tuesday 3:00-4:30 and Thursday 2:00-3:30 in WXLR 303.

Feel free to let the students know that they can come by and ask me questions then (when they're not in one of your classes).

I am also available to the students by email, so they can also feel free to email me with questions.

Best,

Drew

How-to-use-Canvas-Discussions.pdf

Syllabus: Syllabus

Some usefull books: books

Where we are and what I should be doing?

where and what

R and Python

Information on R

Information on Python

Suggested Projects

Inference for the parameters of a Gaussian Process

See Murphy chapter 15.1 to 15.2.5. Murphy.

See also Rasmussen-and-Williams.pdf.

See also chapter 5 of the book "Surrogates" by Robert Gramacy.

Learning a single layer neural network

see section 10.7 Fitting a Neural Network in "An Introduction to Statistical Learning", second edition

by James, Witten, Hastie, and Tibshirani.

Simple Chain Rule Gradient Computation for a Single Layer

Single Layer Neural networks, complete notes from Applied Machine Learning

EM algorithm for a mixture of normals

Some old projects:

Comparing the EM algorithm with the Gibbs sample for uninvariate normal mixtures

Gaussian Processes

Comparing the EM algorithm with Gibbs for univariate mixtures

Monte Carlo EM algorithm

Homework

How_to_Submit_Homework_in_Canvas.pdf

Homework 1

Due February 6.

Homework 2

Due February ??.

Kevin Murphy book on Machine Learning

Solutions

Homework 3

Homework 3, solutions

logit-funs.R

Notes

A first look at simple logistic regression

Let's review a basic nonlinear model in statistics: simple logistic regression.

We will write simple code to compute the likelihood.

We will look the idea of vectorization which applies in both R and python.

Later we will go into more details on how the likelihood is optimized.

Simple vectorized summing in python

Simple Logistic Regression Likelihood

Simple example of logit in R, Rmd

Simple example of logit in Python, notebook

Script to compute the log-likehood:

R code: Logit Example in R

Python code: Logit Example in Python

Plot logit likelihood using color palettes (e.g. viridis) in R

Advanced R, Wickham, Section 24.5.

".. vectorization means finding the existing R function that is implemented in C

and most closely applied to your problem."

Simple note on vectoriziation in python: vectorization in python

Of course, if you code directly in a lower level language like C++ you get the speed:

Calling C++ out of R using, Rcpp, a Makefile, and SHLIB

Calling C++ out of R using Rcpp using rstudio

Matrix Decompositions in Statistics

Quick Review of Some Keys Ideas in Linear Algebra

Simple python script to compare sklearn.Linear regression with (X'X)^{-1} X'y

Simple R script to compare lm with (X'X)^{-1} X'y

What Really IS a Matrix Determinant?

QR Matrix Factorization

Least Squares and Computation (with R and C++)

The Multivariate Normal and the Choleski and Eigen Decompositions

Look at cholesky and spectral in R

Singular Value Decomposition

simple example of svd in python

do_image-svd-approx.py

image approximation with SVD in R, thanks to Andrew Ritchey.

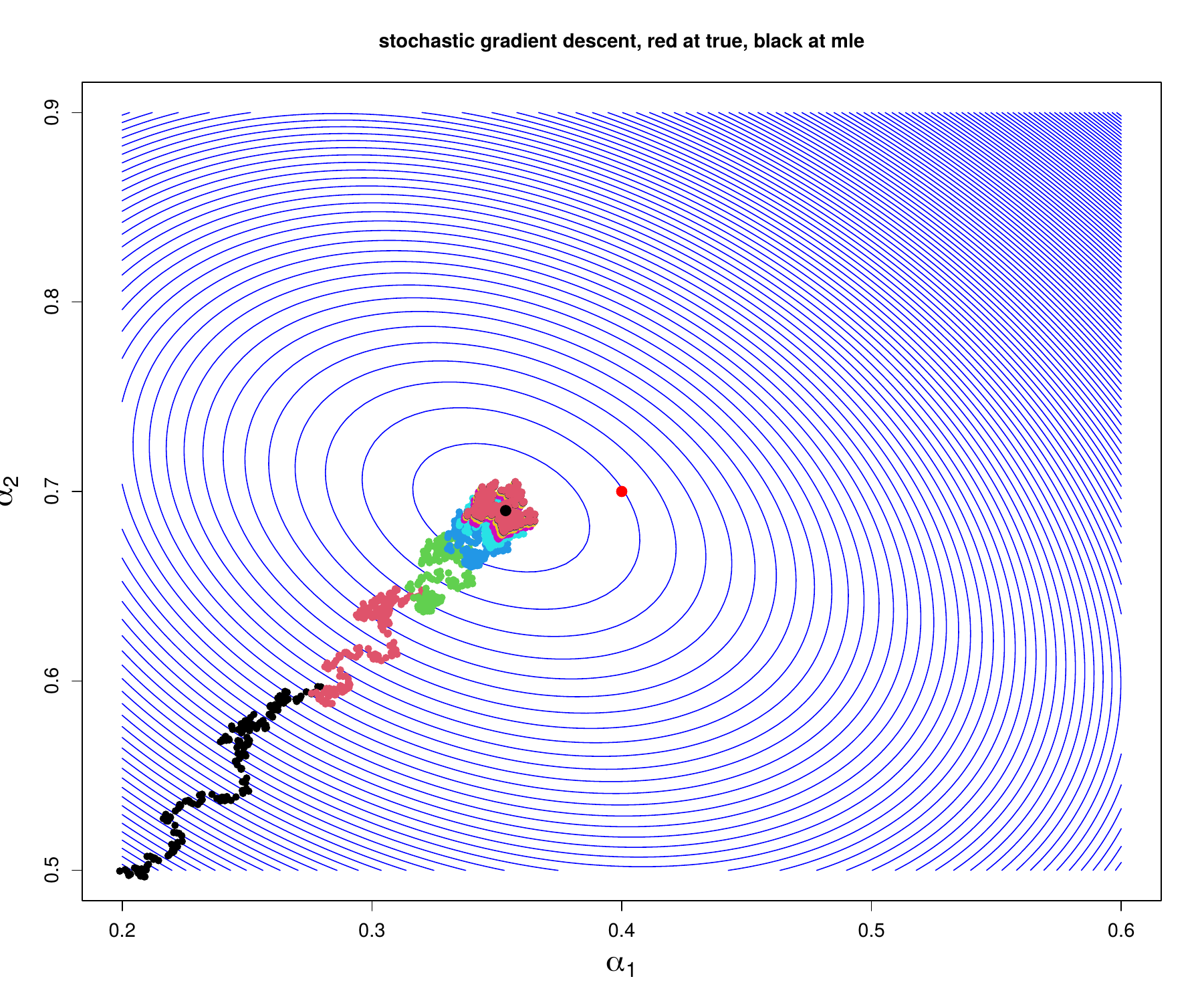

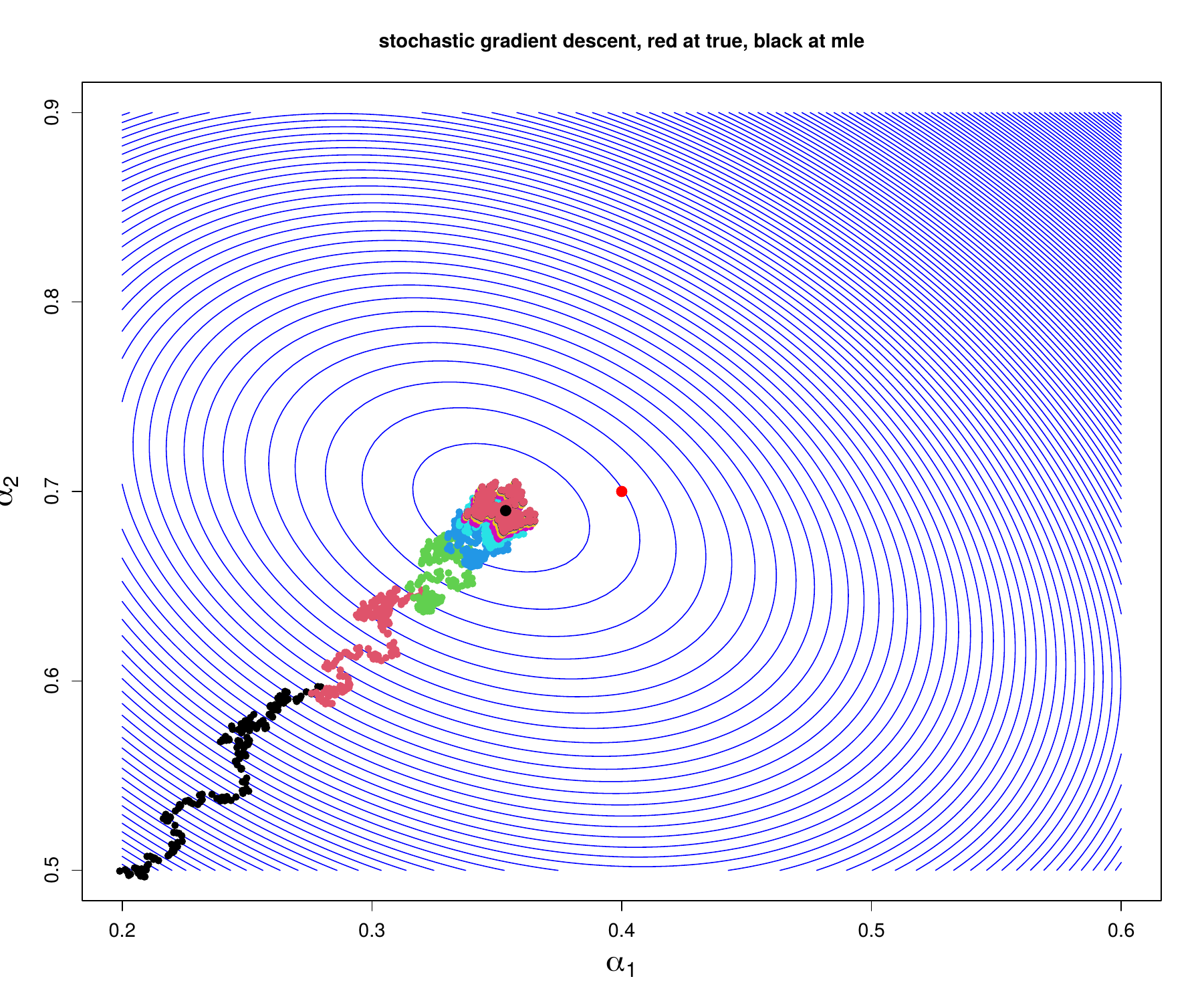

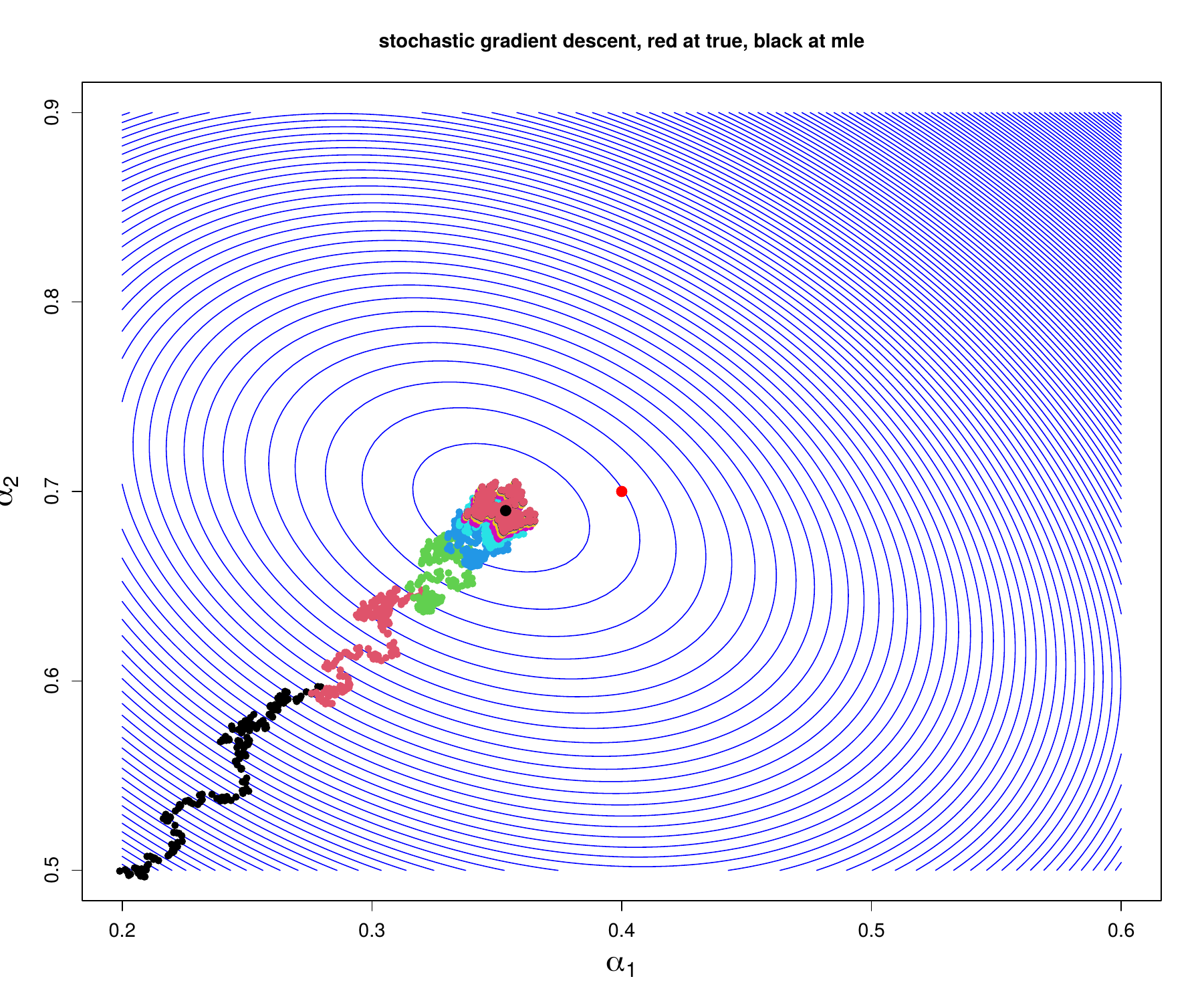

Optimization

Optimization

See chapters 4 and 8 of "Deep Learning" by Goodfellow, Bengio, and Courville.

Simple notes on single layer neural net: Single Layer

Section 3 recording, logit derivatives: recording

Section 4 recording, Taylor's Theorem: recording

Sections 8 and 9 recording, Momentum and Newton's method: recording

Mixture Models and the EM Algorithm

The EM Algorithm

See Chapter 11 of Murphy.

See Chapter 4 of Givens and Hoeting.

See Chapter 8.5 of Hastie, Tibshirani, and Friedman.

Introduction to Bayesian Statistics

Introduction to Bayesian Statistics and the Beta/Bernoulli Inference

Normal Mean Given Standard Deviation

Normal Standard Deviation Given Mean

Multinomial outcomes with the Dirichlet conjugate prior

Introduction to Bayesian Regression

Monte Carlo

Monte Carlo

See Chapter 6 of Givens and Hoeting.

Geweke paper

R script to try various truncated normal draws

Rmarkdown version, of R script to try various truncated normal draws

R script to try various importance sampling approaches for prior sensitivity

Rmarkdown version, of R script to try various importance sampling approaches and SIR for prior sensitivity

Prior based on odds ratio

SIR R script

MCMC: Markov Chain Monte Carlo

See Chapter 7 of Givens and Hoeting.

Markov Chains

Simple Example of a Markov Chain

Gibbs Sampling

Gibbs Sampling for hier means

Note: Hoff refers to ``A First Course in Bayesian Statistical Methods'', by Peter Hoff

Reversable Markov Chains

The Metropolis Algorithm

MH example with for normal data with non-conjugate prior on mean

Recorded Lectures

Gibbs sample for normal (mu,sigma)

General Gibbs sampler and introduction to hierarchical normal means

Gibbs Sampler for Hierarchical means

Metropolis Hastings Algorithm

State Space Models and FFBS

Hotels Problem

intro to state space models

FFBS

R code for hotels example

Forward Filtering for Simple Hotels model

The Bootstrap

(Efron and Hastie, chapters 10 and 11)

The Bootstrap

Thompson Sampling

Tutorial on Thompson Sampling

BART

Introduction to BART

Old BART Talk

Bayesian Additive Regression Trees, Computational Approaches

chapter in Computational Statistics in Data Science